I’ve been messing around with Spark for a few months and dabbled in it on a few work projects, but I recently decided to really get stuck in and understand it. While testing out Spark’s map() and flatMap() transformation operations, I thought I’d post some of my findings here to save myself having to look them up in the future and hopefully help someone who stumbles upon this.

map() vs flatMap(): What’s the difference?

map()

- Applies a function to each element of an RDD (or each row of a Dataset) and returns the results as a new RDD or Dataset, with exactly one output element for every input element.

Example:

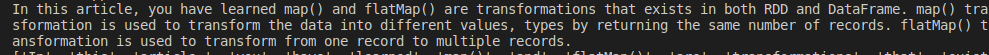

Read a flat file containing a paragraph of words, then pass it to the map() transformation, which applies a function to each line. In this case the function is a Python lambda expression that calls split() to convert each line (a string) into a list of words.

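# Read the text file into an RDD with one element per line.
# `sc` is an existing SparkContext (the pyspark shell creates one for you).

lines = sc.textFile("testing.txt")

# Split each line into a list of words: one list per line.

words = lines.map(lambda x: x.split())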
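To make the shape of the map() output concrete without a Spark cluster, here is a minimal sketch in plain Python. It uses Python's built-in map() rather than Spark, and the sample lines are made up for illustration; they are an assumption, not the contents of the original testing.txt.

```python
# Hypothetical stand-in for the lines of testing.txt.
lines = ["the quick brown fox", "jumps over", "the lazy dog"]

# map(): apply the function to every line; one output element per input line.
mapped = list(map(lambda x: x.split(), lines))

print(mapped)
# [['the', 'quick', 'brown', 'fox'], ['jumps', 'over'], ['the', 'lazy', 'dog']]

# The number of elements is unchanged: three lines in, three lists out.
print(len(lines), len(mapped))  # 3 3
```

Each line has become a list of words, but the lists stay nested inside the outer collection.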

flatMap()

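- The transformation applies a function to each element and then flattens the results, so every item the function returns becomes its own row. The result usually has more rows than the original dataset, but it can also have the same number or fewer, because the function may return an empty collection for some elements.

- Often referred to as a one-to-many transformation function, although strictly each input maps to zero or more outputs.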
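The point about row counts can be sketched in plain Python. The `flat_map` helper below is a hypothetical stand-in written for this post, not part of Spark's API, and the sample lines are invented; it only mimics the "apply, then flatten one level" behaviour described above.

```python
from itertools import chain


def flat_map(func, items):
    """Plain-Python stand-in for flatMap: apply func, then flatten one level."""
    return list(chain.from_iterable(func(item) for item in items))


# Three input lines, one of them empty.
lines = ["a b c", "", "d"]
words = flat_map(lambda x: x.split(), lines)
print(words)                   # ['a', 'b', 'c', 'd']
print(len(lines), len(words))  # 3 4 -> more rows out than in

# Lines with no words contribute nothing, so the output can also shrink.
blank = flat_map(lambda x: x.split(), ["", "   "])
print(blank)                   # []
```

So "one-to-many" is the common case, but an input that yields an empty list simply disappears from the output.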

Example:

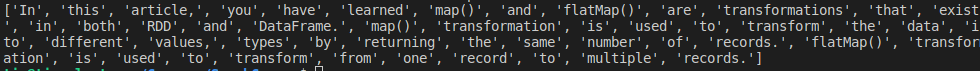

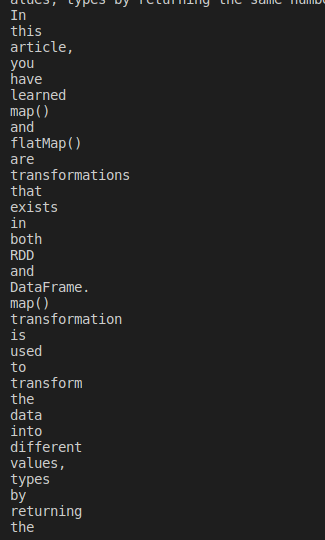

Using the same example as above, we take a flat file with a paragraph of words, pass it to the flatMap() transformation and apply the same lambda expression to split each line into words.

lines = sc.textFile("testing.txt")

# Split each line into words and flatten them into a single RDD of words.

words = lines.flatMap(lambda x: x.split())

Results

As you can see, all the words are split and flattened out into a single collection. Job done!
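For a side-by-side view, here is a small plain-Python sketch that mimics both transformations with list comprehensions. It does not use Spark, and the two sample lines are an assumption made up for illustration.

```python
# Hypothetical two-line sample text.
lines = ["to be or not", "to be"]

# map()-style result: one list of words per line (nested).
nested = [line.split() for line in lines]

# flatMap()-style result: every word is its own element (flat).
flat = [word for line in lines for word in line.split()]

print(nested)  # [['to', 'be', 'or', 'not'], ['to', 'be']]
print(flat)    # ['to', 'be', 'or', 'not', 'to', 'be']

# The first element differs in type: a list of words versus a single word.
print(nested[0])  # ['to', 'be', 'or', 'not']
print(flat[0])    # to
```

If the next step works on individual words, the flat shape is usually the one you want; if you need to keep track of which line each word came from, the nested shape preserves that.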

Further reading

To dig deeper into map and flatMap check out the following resources.