If you’re starting out as a Data Engineer and using AWS, life gets a whole lot easier with Boto3, the AWS SDK for Python. Boto3 simplifies integrating your Python applications, libraries, or scripts with AWS services like Amazon S3, EC2, DynamoDB, and more. Well, that’s what the documentation says.

Basically, it lets you create, update, and delete AWS resources programmatically from your Python scripts. In this post I will give you a quick run-through of the following:

- How to create a bucket.

- How to upload a file to that bucket.

- List your buckets and objects inside a bucket.

- Deleting a file from a bucket.

- Dropping a bucket entirely.

Prep work

I assume you’ve created a user account on AWS using AWS IAM and generated yourself an Access key with programmatic access.

Once you have that, you will need to install the AWS CLI on your operating system. The process is straightforward and you can use the AWS documentation for guidance.

Update your credentials file on your system with your Access Key and Secret Key and we are good to go. The file is normally found in this location on Linux: ~/.aws/credentials

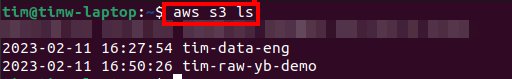

A quick check to see if all is working as it should (after all of the above) is to run aws s3 ls in your terminal. If you get a list of buckets printed out, you are golden and can move on.
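If that check fails, the credentials file is the first place I would look. Here is a minimal sketch, assuming the file uses the usual INI layout with one section per profile and the keys aws_access_key_id and aws_secret_access_key. The function names find_incomplete_profiles and check_credentials_file are made up for this post, and nothing here ever prints the secrets themselves.

```python
import configparser
from pathlib import Path


def find_incomplete_profiles(credentials_text):
    """Return the names of profiles missing an access key or a secret key."""
    parser = configparser.ConfigParser()
    parser.read_string(credentials_text)
    required = ("aws_access_key_id", "aws_secret_access_key")
    incomplete = []
    for profile in parser.sections():
        if not all(parser.has_option(profile, name) for name in required):
            incomplete.append(profile)
    return incomplete


def check_credentials_file(path=None):
    """Report on the credentials file (hypothetical helper, default Linux path)."""
    path = Path(path) if path else Path.home() / ".aws" / "credentials"
    if not path.exists():
        print(f"No credentials file found at {path}")
        return
    incomplete = find_incomplete_profiles(path.read_text())
    if incomplete:
        print(f"Profiles missing a key: {incomplete}")
    else:
        print("Every profile has both an access key and a secret key.")
```

Calling check_credentials_file() with no arguments looks at ~/.aws/credentials. It only tells you the file is well formed, not that AWS accepts the keys, so aws s3 ls is still the real test.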

Boto3 Installation and use

I suggest you set yourself up a Python virtual environment and install Boto3 using the pip installer.

pip install boto3

To use resources within AWS using Boto3, you invoke the resource() method of a Session and pass in an AWS service name. For the full list of services Boto3 supports, check out the list of available services in the Boto3 documentation (prepare to scroll, there are a lot).

import boto3

# Example resources you can invoke

sqs = boto3.resource('sqs')

s3 = boto3.resource('s3')

ec2 = boto3.resource('ec2')

# Transcribe has no resource interface, so it uses a client instead

transcribe = boto3.client('transcribe')

How to create a bucket

In all cases you first create a client for S3 using boto3.client('s3').

import boto3

s3 = boto3.client('s3')

bucket_name = 'your-bucket-name'

# Option 1: create a new S3 bucket without a location constraint (this targets us-east-1)

s3.create_bucket(Bucket=bucket_name)

# Option 2: create the bucket in a specific region instead

s3.create_bucket(

    Bucket=bucket_name,

    CreateBucketConfiguration={

        'LocationConstraint': 'eu-central-1'

    }

)

You can see in the above code you have two options. Option one: create the bucket without a location constraint, which puts it in us-east-1. This only works when your client’s region is us-east-1; with any other default region configured, S3 rejects the call with an IllegalLocationConstraintException. Option two: tell AWS exactly where you want your bucket created, in this case eu-central-1. They are alternatives, so use one or the other.
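The two options can be folded into one small helper. This is only a sketch: create_bucket_in_region is a name I made up, and it assumes you pass in an S3 client that is already configured for the region you are targeting. The one rule it encodes is that us-east-1 must not be sent as a LocationConstraint, while every other region has to be named.

```python
def create_bucket_in_region(s3_client, bucket_name, region):
    """Create a bucket, adding a LocationConstraint only when one is needed."""
    kwargs = {"Bucket": bucket_name}
    if region != "us-east-1":
        # Any region other than us-east-1 has to be spelled out explicitly.
        kwargs["CreateBucketConfiguration"] = {"LocationConstraint": region}
    return s3_client.create_bucket(**kwargs)


# Usage (needs boto3 installed and valid credentials):
#   import boto3
#   s3 = boto3.client("s3", region_name="eu-central-1")
#   create_bucket_in_region(s3, "your-bucket-name", "eu-central-1")
```

Passing the client in as an argument, rather than creating it inside the function, also makes the helper easy to try out against a stand-in object before pointing it at a real account.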

How to upload a file to that bucket

import boto3

# Set up S3 client

s3 = boto3.client('s3')

# Set bucket and file name

bucket_name = 'your-bucket-name'

file_name = 'path/to/your/file.txt'

# Upload file to S3 bucket

s3.upload_file(file_name, bucket_name, file_name)

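In the above you specify the local file and the bucket you want to upload to, then use the upload_file method of the S3 client to upload the file to the bucket. The third argument is the object key, the name the file gets inside the bucket; here it simply reuses the local path.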

It goes without saying, but you will need to have the appropriate permissions and credentials set up to access the S3 bucket.
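Reusing the full local path as the key is not always what you want. As a rough sketch, the helper below (upload_as is a hypothetical name, not part of Boto3) defaults the key to just the file name and lets you override it. It assumes the s3_client you pass in is a normal boto3.client('s3').

```python
from pathlib import Path


def upload_as(s3_client, local_path, bucket_name, key=None):
    """Upload local_path to bucket_name and return the object key that was used."""
    if key is None:
        # Default to the bare file name, e.g. 'file.txt' for 'path/to/your/file.txt'.
        key = Path(local_path).name
    s3_client.upload_file(str(local_path), bucket_name, key)
    return key


# Usage (needs boto3 installed and valid credentials):
#   import boto3
#   s3 = boto3.client("s3")
#   upload_as(s3, "path/to/your/file.txt", "your-bucket-name")
#   upload_as(s3, "path/to/your/file.txt", "your-bucket-name", key="raw/file.txt")
```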

List your buckets and objects inside a bucket

import boto3

# Set up S3 client

s3 = boto3.client('s3')

# List all buckets

response = s3.list_buckets()

buckets = [bucket['Name'] for bucket in response['Buckets']]

print("List of Buckets:")

print(buckets)

# List all objects in a specific bucket

bucket_name = 'your-bucket-name'

response = s3.list_objects_v2(Bucket=bucket_name)

# 'Contents' is missing when the bucket is empty, so fall back to an empty list

objects = [obj['Key'] for obj in response.get('Contents', [])]

print(f"List of Objects in {bucket_name}:")

print(objects)

To list the buckets, we use the list_buckets method of the S3 client, which returns all the buckets in your AWS account. The response returned by list_buckets is a dictionary that contains a list of bucket entries under the key ‘Buckets’, and each entry carries the bucket name under ‘Name’.

To list the objects in a specific bucket, you can use the list_objects_v2 method of the S3 client and specify the bucket name. The response returned by list_objects_v2 is a dictionary that contains a list of objects in the bucket under the key ‘Contents’. You can use the ‘Key’ attribute of each object to get the object names. Two things to watch for: the ‘Contents’ key is absent when the bucket is empty, and a single call returns at most 1,000 objects.
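For buckets with more objects than one call returns, Boto3 clients offer paginators that keep fetching pages for you. The sketch below uses the client’s get_paginator method for list_objects_v2; the function name list_all_keys is my own invention, and it assumes you hand it a regular S3 client.

```python
def list_all_keys(s3_client, bucket_name):
    """Return every object key in the bucket, following pagination to the end."""
    paginator = s3_client.get_paginator("list_objects_v2")
    keys = []
    for page in paginator.paginate(Bucket=bucket_name):
        # An empty bucket yields a page with no 'Contents' key at all.
        keys.extend(obj["Key"] for obj in page.get("Contents", []))
    return keys


# Usage (needs boto3 installed and valid credentials):
#   import boto3
#   s3 = boto3.client("s3")
#   print(list_all_keys(s3, "your-bucket-name"))
```

For very large buckets you may prefer to turn this into a generator instead of building one big list in memory.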

Deleting a file from a bucket

import boto3

# Set up S3 client

s3 = boto3.client('s3')

# Set bucket and file name

bucket_name = 'your-bucket-name'

file_name = 'path/to/your/file.txt'

# Delete file from S3 bucket

s3.delete_object(Bucket=bucket_name, Key=file_name)

Deleting a file is surprisingly simple: you specify the S3 bucket name and the key of the file you want to delete, then use the delete_object method of the S3 client to delete it from the specified bucket. No warning, no double checking, just delete and it’s gone. Simple? Yes. Dangerous in the wrong hands? Definitely!

Note that once you delete an object from an S3 bucket, it cannot be recovered unless versioning was enabled on the bucket beforehand. Without versioning, even if you’re Jeff Bezos it’s gone!
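Since S3 will not ask twice, one option is to add the double check yourself. This is just a sketch of the idea: delete_file and its dry_run flag are hypothetical names of mine, not anything Boto3 provides. By default it only reports what it would delete, and it calls delete_object only when you explicitly switch the dry run off.

```python
def delete_file(s3_client, bucket_name, key, dry_run=True):
    """Delete one object, but only when dry_run is explicitly set to False."""
    if dry_run:
        print(f"[dry run] would delete s3://{bucket_name}/{key}")
        return False
    s3_client.delete_object(Bucket=bucket_name, Key=key)
    return True


# Usage (needs boto3 installed and valid credentials):
#   import boto3
#   s3 = boto3.client("s3")
#   delete_file(s3, "your-bucket-name", "path/to/your/file.txt")                 # reports only
#   delete_file(s3, "your-bucket-name", "path/to/your/file.txt", dry_run=False)  # really deletes
```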

Dropping a bucket entirely

import boto3

# Set up S3 client

s3 = boto3.client('s3')

# Set bucket name

bucket_name = 'your-bucket-name'

# Delete bucket from S3

s3.delete_bucket(Bucket=bucket_name)

Finally, you use the delete_bucket method of the S3 client to delete a bucket. I’ll be honest, I can’t see any reason why you would be dropping a bucket through code like this unless you are cleaning up a ton of them, but maybe I’ve just never had to do it. Still, nice to know you can.

Side note: you can only delete an S3 bucket if it is empty. If there are any objects in the bucket, you will need to delete them first before deleting the bucket. Yes, it gets me more times than I care to admit.
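If you do find yourself cleaning up, emptying the bucket first can be scripted too. The sketch below is one way to do it, assuming a bucket without versioning (a versioned bucket also needs its old versions and delete markers removed, which this does not handle). The name empty_and_delete_bucket is mine; the calls it makes are the client’s get_paginator, delete_objects, and delete_bucket methods. It does not inspect the delete_objects response for per-key errors, so treat it as a starting point.

```python
def empty_and_delete_bucket(s3_client, bucket_name):
    """Delete every object in the bucket, then the bucket; return the object count."""
    paginator = s3_client.get_paginator("list_objects_v2")
    deleted = 0
    for page in paginator.paginate(Bucket=bucket_name):
        objects = [{"Key": obj["Key"]} for obj in page.get("Contents", [])]
        if objects:
            # A page holds at most 1,000 keys, which is also the batch limit for delete_objects.
            s3_client.delete_objects(Bucket=bucket_name, Delete={"Objects": objects})
            deleted += len(objects)
    # The bucket is empty now, so S3 will accept the delete.
    s3_client.delete_bucket(Bucket=bucket_name)
    return deleted


# Usage (needs boto3 installed and valid credentials, and it is NOT reversible):
#   import boto3
#   s3 = boto3.client("s3")
#   empty_and_delete_bucket(s3, "your-bucket-name")
```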

I hope that gives you a little insight into how easy Boto3 makes working with AWS, especially for a Data Engineer.

Tim